#include <stdio.h>
#include <chrono>
#include <iostream>
#include <vector>

#include <opencv2/opencv.hpp>
#include <opencv2/core/ocl.hpp>
#include <opencv2/dnn.hpp>
#include <opencv2/highgui.hpp>

using namespace cv;
using namespace std;

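// Network input size passed to blobFromImage (width x height, in pixels).
const int width = 500;
const int height = 120;

// Class index of the most recent detection (index into class_video_Names).
size_t det_index;

// Preprocessing: blobFromImage computes (pixel - meanVal) * scaleFector,
// which maps 8-bit values [0, 255] to roughly [-1, 1] (0.007843 ~= 1/127.5).
const float scaleFector = 0.007843f;
const float meanVal = 127.5f;

dnn::Net net;

// Label table indexed by the class id reported by the detector.
const char* class_video_Names[] = { "background", "yiwu" };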

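// Runs the detector on `src` and writes the box (pixel coordinates in `src`)
// and class index of the last detection above the confidence threshold into
// the output parameters. The outputs are left unchanged if nothing is found.
Mat detect_from_video(Mat &src, float &x1, float &y1, float &x2, float &y2, size_t &det_index)
{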

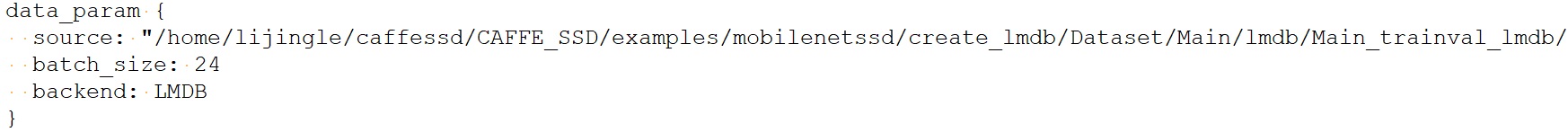

    // Resize to the network input size, subtract the mean and scale.
    Mat blobimg = dnn::blobFromImage(src, scaleFector, Size(width, height), Scalar::all(meanVal));
    net.setInput(blobimg, "data");
    Mat detection = net.forward("detection_out");

    // Assuming an SSD-style DetectionOutput layer, the output is a 1 x 1 x N x 7
    // blob. View it as an N x 7 matrix whose rows are
    // [image_id, class_id, confidence, x_min, y_min, x_max, y_max],
    // with box coordinates normalized to [0, 1].
    Mat detectionMat(detection.size[2], detection.size[3], CV_32F, detection.ptr<float>());

    const float confidence_threshold = 0.75f;
    for (int i = 0; i < detectionMat.rows; i++) {
        float detect_confidence = detectionMat.at<float>(i, 2);
        if (detect_confidence > confidence_threshold) {
            // Scale the normalized corners back to pixels of `src`.
            det_index = (size_t)detectionMat.at<float>(i, 1);
            x1 = detectionMat.at<float>(i, 3) * src.cols;
            y1 = detectionMat.at<float>(i, 4) * src.rows;
            x2 = detectionMat.at<float>(i, 5) * src.cols;
            y2 = detectionMat.at<float>(i, 6) * src.rows;
        }
    }
    return src;
}
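
// ---------------------------------------------------------------------------
// Sketch (hypothetical helper, not called anywhere in this program): decoding
// one row of an SSD-style "detection_out" blob, i.e. the same columns 1..6
// that detect_from_video() reads. It assumes the row layout
// [image_id, class_id, confidence, x_min, y_min, x_max, y_max] with corners
// normalized to [0, 1]. The optional (originX, originY) offset shows how a
// box found inside a cropped region maps back to full-frame pixels.
// The names PixelBox and decodeDetectionRow are illustrative only.
// ---------------------------------------------------------------------------
#include <cstddef>

struct PixelBox {
    std::size_t classId;
    float confidence;
    float x1, y1, x2, y2;  // pixel coordinates
};

// Converts one normalized row into pixels of an imgCols x imgRows image,
// then shifts the box by the crop origin (originX, originY).
inline PixelBox decodeDetectionRow(const float row[7], int imgCols, int imgRows,
                                   int originX = 0, int originY = 0)
{
    PixelBox box;
    box.classId    = static_cast<std::size_t>(row[1]);
    box.confidence = row[2];
    box.x1 = row[3] * imgCols + originX;
    box.y1 = row[4] * imgRows + originY;
    box.x2 = row[5] * imgCols + originX;
    box.y2 = row[6] * imgRows + originY;
    return box;
}
// Usage (hypothetical): PixelBox b = decodeDetectionRow(detectionMat.ptr<float>(i),
//                                                       crop.cols, crop.rows, 83, 168);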

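// Masks `img1` with a hand-drawn polygon (the left region of interest) and
// copies only the pixels inside it into `dst`.
Mat changeImgLeft(Mat img1, Mat dst)
{
    cout << img1.rows << " x " << img1.cols << endl;

    // Single-channel mask, initially all zero.
    Mat roi = Mat::zeros(img1.size(), CV_8U);
    imshow("roi", roi);

    // Polygon vertices in pixel coordinates of the input frame.
    vector<vector<Point>> contour_left;
    vector<Point> pts_left;
    pts_left.push_back(Point(640, 281));
    pts_left.push_back(Point(246, 257));
    pts_left.push_back(Point(244, 247));
    pts_left.push_back(Point(97, 237));
    pts_left.push_back(Point(83, 168));
    pts_left.push_back(Point(640, 160));
    contour_left.push_back(pts_left);

    // Fill the polygon (thickness -1) with 255 to build the mask.
    drawContours(roi, contour_left, 0, Scalar::all(255), -1);
    imshow("roi_d", roi);

    // When dst has to be (re)allocated, copyTo with a mask zero-fills it
    // first, so pixels outside the polygon end up black.
    img1.copyTo(dst, roi);
    imshow("dst", dst);
    return dst;
}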
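
// ---------------------------------------------------------------------------
// Sketch (hypothetical helper, not used by main() below): a rolling FPS
// average over the last N frames. It follows the same idea as the FPS[16]
// ring buffer in main(), including skipping non-positive durations like the
// `if(f>0.0)` check, but it divides by the number of filled slots instead of
// always by 16, so the first readings are not skewed by empty slots.
// The class name FpsMeter is illustrative only.
// ---------------------------------------------------------------------------
#include <array>
#include <cstddef>

template <std::size_t N = 16>
class FpsMeter {
public:
    // Records one frame's processing time in milliseconds.
    void addFrameMs(float ms)
    {
        if (ms <= 0.0f) return;            // ignore zero-length measurements
        samples_[next_] = 1000.0f / ms;    // store the per-frame FPS
        next_ = (next_ + 1) % N;           // advance the ring-buffer index
        if (filled_ < N) ++filled_;
    }

    // Mean FPS over the filled slots (0 if nothing has been recorded yet).
    float average() const
    {
        if (filled_ == 0) return 0.0f;
        float sum = 0.0f;
        for (std::size_t i = 0; i < filled_; ++i) sum += samples_[i];
        return sum / static_cast<float>(filled_);
    }

private:
    std::array<float, N> samples_{};  // zero-initialized ring buffer
    std::size_t next_ = 0;
    std::size_t filled_ = 0;
};
// Usage (hypothetical): FpsMeter<> meter; meter.addFrameMs(f);
//                       putText(frame, format("FPS %0.2f", meter.average()), ...);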

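int main(int argc, char **argv)
{
    float f, x1 = 0.0f, y1 = 0.0f, x2 = 0.0f, y2 = 0.0f;
    float FPS[16] = {0};   // ring buffer of the last 16 per-frame FPS values
    int i, Fcnt = 0;
    int count = 0;         // number of frames processed so far
    VideoWriter vw1;
    Size dsize = Size(300, 300);
    Mat img2;              // resized copy of the cropped region (allocated by resize)
    Mat frame, CloseimgROI, CloseImgLeft;
    chrono::steady_clock::time_point Tbegin, Tend;

    // Note: readNetFromTorch() loads Torch7 (.t7/.net) models. A PyTorch .pt
    // checkpoint is a different format and would typically have to be exported
    // first (for example to ONNX, then loaded with dnn::readNetFromONNX()).
    net = dnn::readNetFromTorch("csv_retinanet_5.pt");
    if (net.empty()) {
        cout << "Failed to load the model network" << endl;
        return -1;
    }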

    VideoCapture capture;
    capture.open("./2021-01-06-14-22-02-L.mp4");
    if (!capture.isOpened())
    {
        printf("can not open the input video\n");
        return -1;
    }

    // Output writer: 16 FPS, 640x480. Frames written to it must have this size.
    vw1.open("save.mp4", VideoWriter::fourcc('m', 'p', '4', 'v'), 16, Size(640, 480));
    if (!vw1.isOpened())
    {
        cout << "Failed to open vw1" << endl;
        return -1;
    }

    // Optional OpenCL target for the network:
    //cout << "Switched to " << (cv::ocl::useOpenCL() ? "OpenCL enabled" : "CPU") << endl;
    //net.setPreferableTarget(dnn::DNN_TARGET_OPENCL);

    cout << "Start grabbing, press ESC in the \"frame\" window to terminate" << endl;

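    // Rectangle around the core region: columns 83..639, rows 168..288.
    const Rect cropRect(83, 168, 640 - 83, 289 - 168);

    while (capture.read(frame)) {
        //frame = imread("2.jpg"); // need to refresh frame before dnn class detection

        // Mask the frame to the left polygon, then crop the core region to a rectangle.
        CloseimgROI = changeImgLeft(frame, CloseimgROI);
        CloseImgLeft = CloseimgROI(cropRect);
        count++;

        // Time only the network inference.
        Tbegin = chrono::steady_clock::now();
        detect_from_video(CloseImgLeft, x1, y1, x2, y2, det_index);
        Tend = chrono::steady_clock::now();

        // Inference rate in frames per second, averaged over the last 16 frames.
        f = chrono::duration_cast<chrono::milliseconds>(Tend - Tbegin).count();
        if (f > 0.0f) FPS[(Fcnt++) & 0x0F] = 1000.0f / f;
        for (f = 0.0f, i = 0; i < 16; i++) { f += FPS[i]; }
        putText(frame, format("FPS %0.2f", f / 16), Point(10, 20), FONT_HERSHEY_SIMPLEX, 0.6, Scalar(0, 0, 255));

        // Show output.
        imshow("CloseImgLeft", CloseImgLeft);

        // Draw only when a detection was found. The box is relative to the
        // crop, so shift it by the crop origin to draw it on the full frame.
        if (x2 > x1 && y2 > y1) {
            Rect rec((int)x1 + cropRect.x, (int)y1 + cropRect.y, (int)(x2 - x1), (int)(y2 - y1));
            rectangle(frame, rec, Scalar(0, 0, 255), 2, LINE_8, 0);

            // Guard against class ids outside the label table.
            const size_t numClasses = sizeof(class_video_Names) / sizeof(class_video_Names[0]);
            const char* label = det_index < numClasses ? class_video_Names[det_index] : "unknown";
            putText(frame, label, Point(rec.x, rec.y - 5), FONT_HERSHEY_SIMPLEX, 1.0, Scalar(0, 0, 255), 2, LINE_8);
        }

        resize(CloseImgLeft, img2, dsize);
        imshow("frame", frame);

        // Save a single snapshot of frame 1550.
        if (count == 1550)
        {
            //vw1 << frame;  // frame must match the writer's 640x480 size
            cv::imwrite("save.jpg", frame);
        }

        // Reset the detection outputs for the next frame.
        x1 = 0.0f;
        y1 = 0.0f;
        x2 = 0.0f;
        y2 = 0.0f;
        det_index = 0;

        char esc = waitKey(5);
        if (esc == 27) break;   // ESC
    }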

    vw1.release();
    capture.release();
    cout << "Closing the video" << endl;
    destroyAllWindows();
    cout << "Bye!" << endl;
    return 0;
}